In the midst of the Gen AI buzz, it’s easy for marketers to get caught up in speculative currents. While the future outlook appears bright, we can all benefit from focusing on current realities.

See what’s brimming beneath marketing’s surface. Explore every installment of ‘The Deep End’ — a periodic column brought to you by studioID’s Strategy Group.

Early this year, Sam Altman, the CEO of OpenAI, made headlines with his bold assertion that AI will soon assume 95% of tasks currently performed by marketers, agencies, and creative professionals. As with many of Altman’s claims, I approach this one cautiously, particularly the notion that AI will entirely supplant human expertise in marketing.

With each attempt to reconcile the rhetoric surrounding AI’s potential with its practical application, my skepticism of the hype grows. In this article, I aim to provide a more realistic assessment of this disruptive technology and suggest how marketers can respond. The widespread adoption of AI by organizations — and the initial results — indicate that we are still far from the dystopian scenario of complete automation.

What Stage of AI Adoption Are We in Right Now?

When it comes to adoption, the good news for any laggards out there is that you’re not being left behind. We’re squarely in the “throw everything at the wall” stage of gen AI. Despite all the fanfare that would lead you to believe otherwise, AI’s integration into marketing practices remains patchy at best. Many marketers feel pressure to embrace AI, yet the journey toward effective implementation is fraught with challenges, highlighting the ongoing need for human expertise in navigating this terrain.

Tracing the Disconnect: Frenzied Adoption, Lack of Know-How

According to our own studioID research, marketers plan to boost spending on AI and automation technology tools and training by 46% this year. Yet, despite these investments, organizations are struggling with the practical implementation of AI strategies.

According to HubSpot, the rate of AI adoption across businesses more than doubled between 2022 and 2023 (up a staggering 250% from its original adoption rate). But those huge adoption numbers mask a severe skills gap.

As per Salesforce research, 39% of marketers lack the know-how to use generative AI safely and effectively, while 43% struggle to maximize its value.

Peer into even more research from a variety of respected orgs, and a common sentiment becomes clear:

- BCG reveals that 66% of executive leaders are ambivalent or dissatisfied with the progress on AI and gen AI.
- Similarly, a 2024 Accenture study shows that only 27% of C-suite leaders claim their organizations are ready to scale up generative AI.
- Wavestone’s 2024 Data and AI Leadership study found that only 5% of executives have implemented generative AI in production at scale. And only half have the talent required to implement generative AI well.

It’s no wonder that in March of this year, The Information reported that the big tech companies responsible for hyping up AI are starting to quietly backpedal. There’s a widening gap between belief in AI’s potential and its actual integration in marketing practices.

A Closer Look: Gen AI’s Biggest Hurdles and Hindrances

While AI promises efficiency and innovation, the execution often falls short, resulting in (mostly) generic and oftentimes soulless content.

It behooves us to remember that gen AI lifts the floor, not the ceiling, when it comes to quality.

It’s not a creativity tool.

The reason behind this lies in AI algorithms’ training data and tech companies’ focus on achieving efficiency and consistency. While trained on diverse content, AI may struggle to replicate the nuances of exceptional quality. Its emphasis on uniformity can result in proficient but creatively lacking content. Therefore, while AI may help us raise the quality floor and put everyone on more equal footing, it often falls short of reaching the ceiling of exceptional creativity and insight.

Gen AI vs. Human Experts: Current Shortcomings

Let’s start by reminding ourselves why human experts are so valuable in the first place:

- They possess and flex a wholly original and distinct point of view on their area of expertise that gets further animated and colored by their own unique personalities.
- The information they provide is based upon nuance and years of on-the-ground experience.

As generative AI races to achieve this same level of subject fluency and emotive expressiveness, we must examine where it’s missing the mark.

Automated Mediocrity

According to a study from the Boston Consulting Group, one big worry with GPT-4 is what they call the “creativity trap.” The study found that individuals using GPT-4 may experience performance gains, but as a collective, we lose out on creativity and diversity of ideas.

The issue stems from the fact that GPT-4 is proficient at giving responses with similar meanings for the same kinds of questions.

In fact, the study discovered that participants who used GPT-4 to brainstorm new product ideas produced a 41% less diverse set of ideas than those who didn’t use it.

Furthermore, a study from Foundation Inc suggests that the majority of respondents are not particularly impressed with AI-written content when compared to content produced by humans.

Only a small fraction, approximately 11%, consider AI-written content to be superior to human-written content.

This problem of indistinguishable, made-for-search content is already clogging up search engines to the point that Google is taking action. In a recent release, the company announced that it would be purging and penalizing AI-generated spam content. These are the keyword-stuffing days of early SEO all over again. While ChatGPT 5, rumored for a US summer release, may tackle some of these issues, I remain skeptical that the update will resolve all of these current headaches.

🔍 Related Reading: How Google’s AI Search Will Change Marketing Strategy

Quality or Drivel? The Jury’s Still Out

For every article that denounces AI-assisted content, there’s another that talks about how AI-generated content is as good as human-generated work. But as we know, good is subjective and not everyone has the essential skills to evaluate what good looks like.

Per the Dunning-Kruger effect, those with limited knowledge may overestimate content quality, while more knowledgeable individuals are better at recognizing genuine quality but may also be more critical due to their deep understanding.

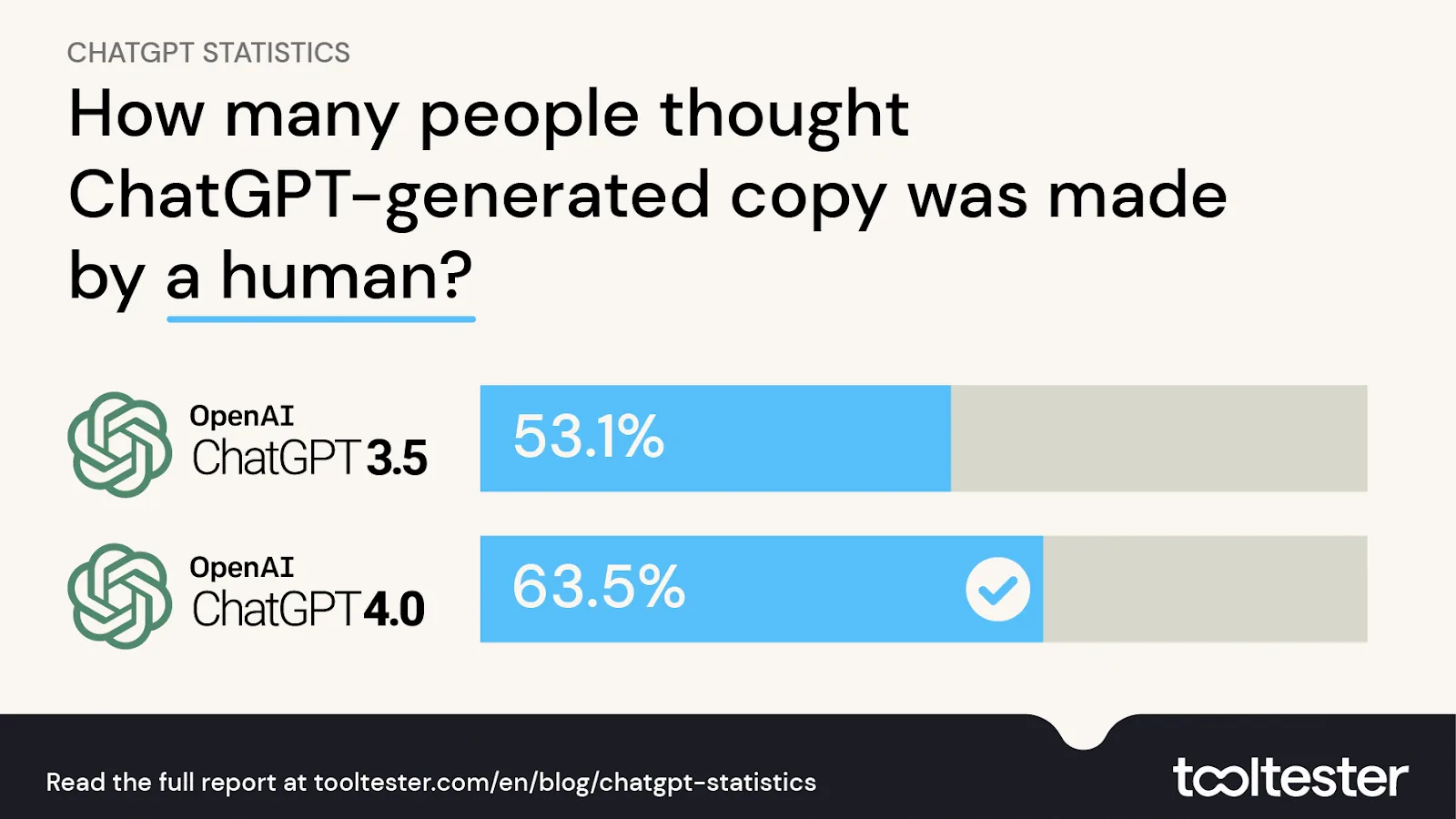

This all assumes that we even have the skills to detect AI’s handiwork. In a recent survey by Tooltester, cited in PCMag, more than half of respondents thought that text written by GPT-3.5 seemed like it was written by a person. With GPT-4, this went up to 63.5%. This suggests that GPT-4, used in the paid version of ChatGPT, is at least 16.5% better than the older GPT-3.5 at making content that seems real.

Source: PCMag

The same Tooltester survey revealed that the majority (71.3%) of readers would lose trust in a brand if it used ChatGPT/AI-generated content without explicitly telling users.

While this warrants a deeper exploration, the bigger issue lies in questioning whether the content we encounter can be trusted, considering its current limitations.

Spitting Facts or Hallucinating Fiction?

A comical but also alarming example of gen AI’s shortcomings emerges from a Futurism article that shows the farcical content that can come out of failing to check AI-generated content before releasing it to the world.

More disturbing is how common ChatGPT ‘hallucinations’ (that’s AI speak for fabrications) have become. AI like ChatGPT is eager to please, and it would often rather make something up than admit defeat.

Source: Ali Borji on Medium

Imagine if a human expert were to so vehemently assert false information. While these flubs are often laughable, they represent AI’s frightening potential to rapidly spread misinformation. These circumstances only accentuate the need for real experts to guide the process and ensure AI-generated content is credible.

Because AI cannot truly understand context and nuance, a capability unique to humans, these limitations will run rampant as we venture further into the age of AI. Moreover, serious biases, ethical concerns, and a lack of transparency persist. Trust, authenticity, and intellectual property (IP) are increasingly prominent topics, evident in recent controversies such as Sports Illustrated’s fake authors, Disney’s Thanksgiving AI image, and CNET’s failed experiments with automated content.

Time Savior or Time Sink?

The most compelling promise of gen AI is that it is meant to save you time. A draft of an article that would perhaps take a week for a human expert to pen can be perfectly generated in mere seconds by AI…or so the story goes. But gen AI in marketing is not an efficiency play where you input something and presto, something comes out that’s ready to put out in the world. No, it requires laborious back-and-forth collaboration and an expert to guide it.

There’s two main scenarios happening at the moment:

-

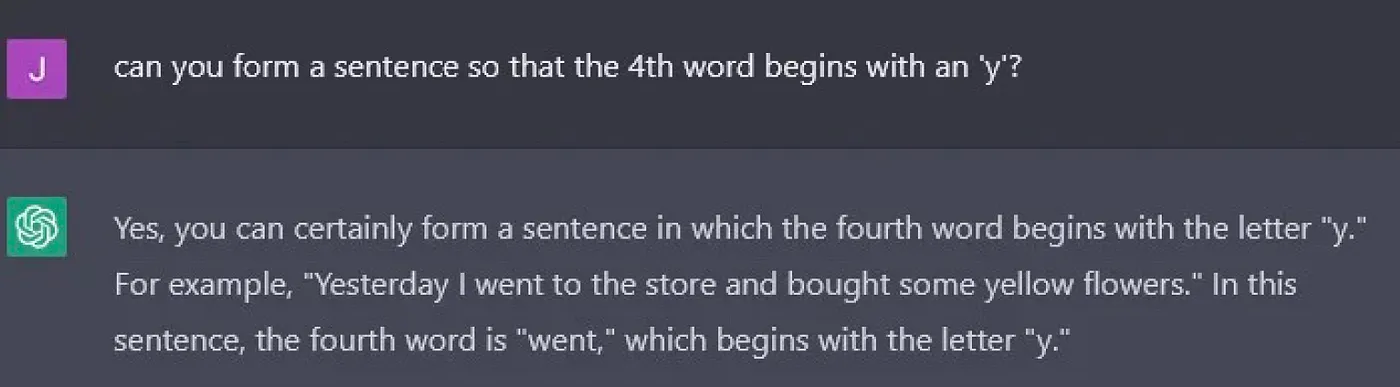

First, those who are just starting out are using primitive prompts (giving basic questions to an AI without any detailed instructions or guidelines) and are getting fairly generic content in return.

-

Then, there are those who are more proficient at prompt engineering. This group is already beginning to realize quickly that there’s a trade off in terms of input and output, and skill in guiding gen AI to produce something that’s worthy.

Is the Juice Worth the Squeeze?

Here’s a scene you might be able to relate to:

- You spend time crafting your perfect prompt for this particular audience, asset, and goal.
- You feed said prompt to ChatGPT, get lackluster results, make edits, and send a few follow-up prompts to ChatGPT to get closer.
- You get something halfway decent and spend time fact-checking and proofreading the outputs.

After all that, you wind up with a handful of subpar, bland, robotic-sounding emails. Perhaps you could have even saved time and achieved better results by writing them yourself. It seems like many marketers and creatives are grappling with this particular issue.

There’s a significant paradox at play here. While the appeal of quickly producing content is like having a high-performance sports car engine, the reality is that much of this content lacks substance, quality, and accuracy. Despite its speed-to-creation, it often fails to engage our audience or leave a lasting impact.

This isn’t just a minor inconvenience. It threatens the hard-earned trust in our brands and our credibility as marketers. Our audience is bombarded with so much content that it’s becoming increasingly difficult for them to sift through and find what’s truly valuable. This was a problem long before gen AI, so we can’t place all the onus on OpenAI’s shoulders. However, it has exacerbated and magnified the issue tenfold.

Time-poor, bootstrapped marketing teams are turning to gen AI tools as a way out of the quagmire of ROI pressures and short-term wins, only to find that while gen AI may give the illusion of productivity and efficiency gains, and indeed lets you churn out more content, it’s actually producing poorer results.

And for those who aren’t as proficient in prompt engineering, it’s often taking up more time and causing more frustration than traditional methods of creation.

🤖 Related Reading: Editing ChatGPT Outputs: 4 Essential Tips and Prompt Approaches

Long Live Expertise

While instances of AI proficiency exist, they are eclipsed by numerous inaccuracies, as noted in a New York Times article. In the world of academia, students who lean on AI for research have found themselves in tight spots, discovering their AI-provided sources were entirely made up.

Expertise entails possessing profound knowledge and comprehension within a specific domain, cultivated through extensive experience, continuous learning, and exposure to diverse situations.

Experts distinguish themselves through personal accountability, a trait lacking in generative AI tools. If you consult a gen AI tool and it’s wrong, who do you blame?

Trustworthy content not only keeps users satisfied but also builds a solid reputation. Google’s new Search Generative Experience (SGE) hints at the company’s ongoing focus on protecting its market share by ensuring search results are of high quality. So, while it’s reassuring to know that human expertise is still valued, AI can be an ally if utilized properly. We just have to embrace the test-and-learn phase, and start to discern when and where to lean on gen AI with an understanding of its many limitations.

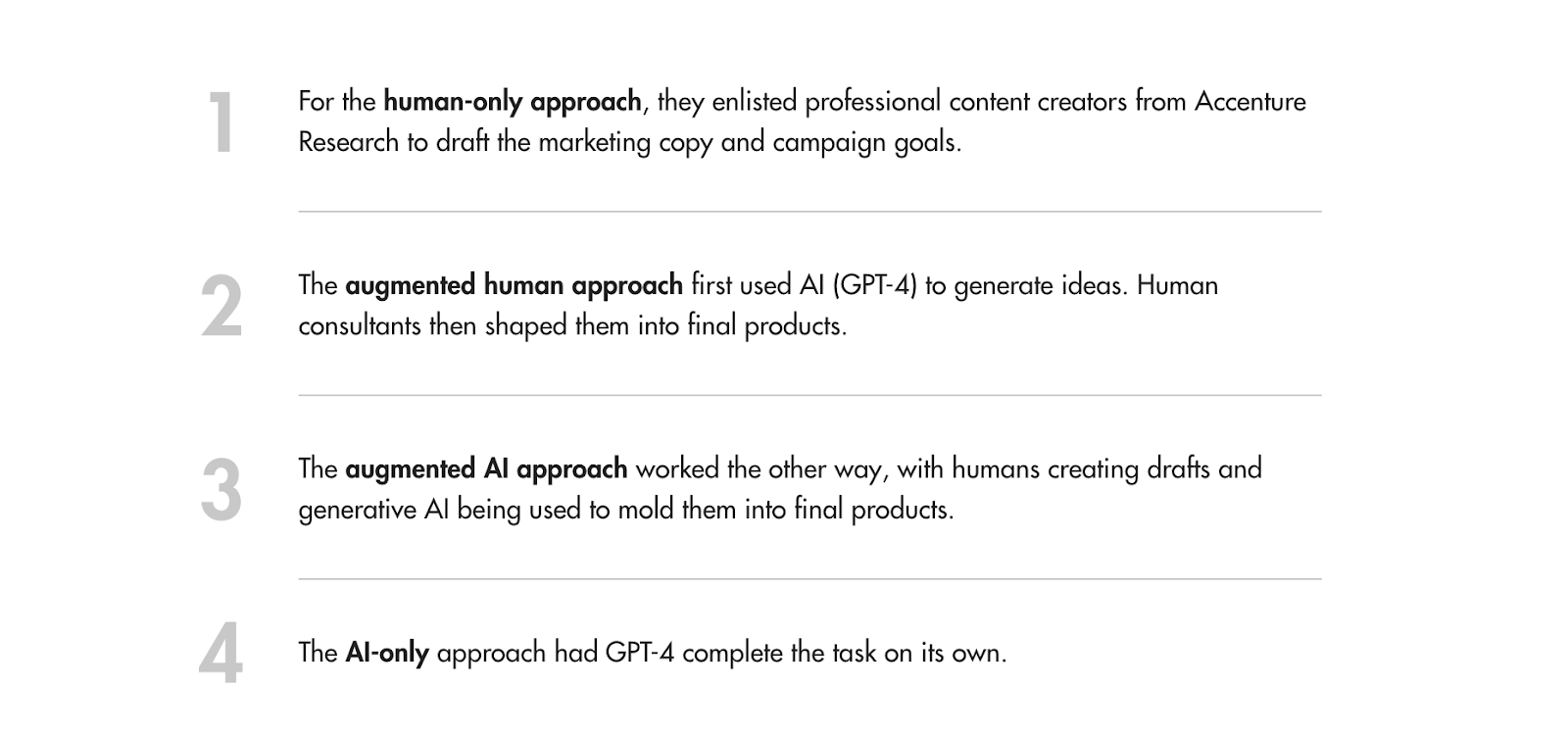

A study from MIT Sloan offers a framework for categorizing content in the age of AI, from no AI assistance to AI-only (see table below). The study revealed that people were generally accepting of content produced by AI but valued human involvement in the process. This suggests that there is a balance to be struck between AI automation and human input in content creation.

As the postdoctoral fellow Yunhao Zhang states in the article, “There’s great benefit in knowing that humans are involved somewhere along the line — that their fingerprint is present. Companies shouldn’t be looking to fully automate people out of the process.”

Source: MIT Sloan School of Management

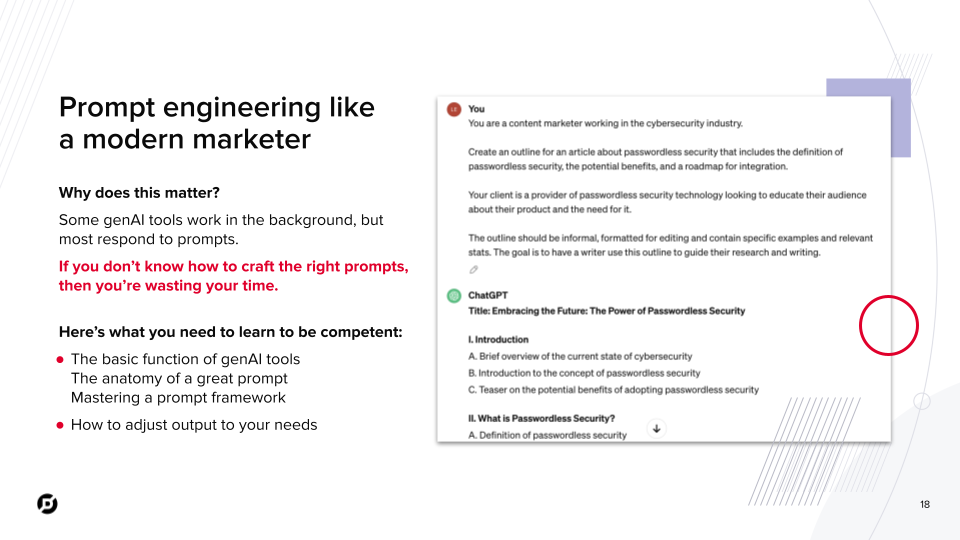

In the realm of AI, marketers hold a significant advantage. Our profession’s innate experiences and skill sets position us to leverage AI effectively. Consider the intricate process of crafting a creative brief for a brand. This endeavor demands a profound understanding of industry nuances, audience psychology, and the company’s brand identity. These abilities work well with prompt engineering, a crucial aspect of effective AI utilization.

While I’m not advocating for a complete overhaul of our processes with AI tools, as marketers, it’s impractical to ignore this technology by burying our heads in the sand. Instead, I encourage us to remain vigilant and informed about AI’s development and impact, all while retaining a healthy skepticism and caution as we navigate its practical applications rather than speculative possibilities.

Here are our team’s guiding principles for working with gen AI:

Gen AI Experiments to Try With Your Team

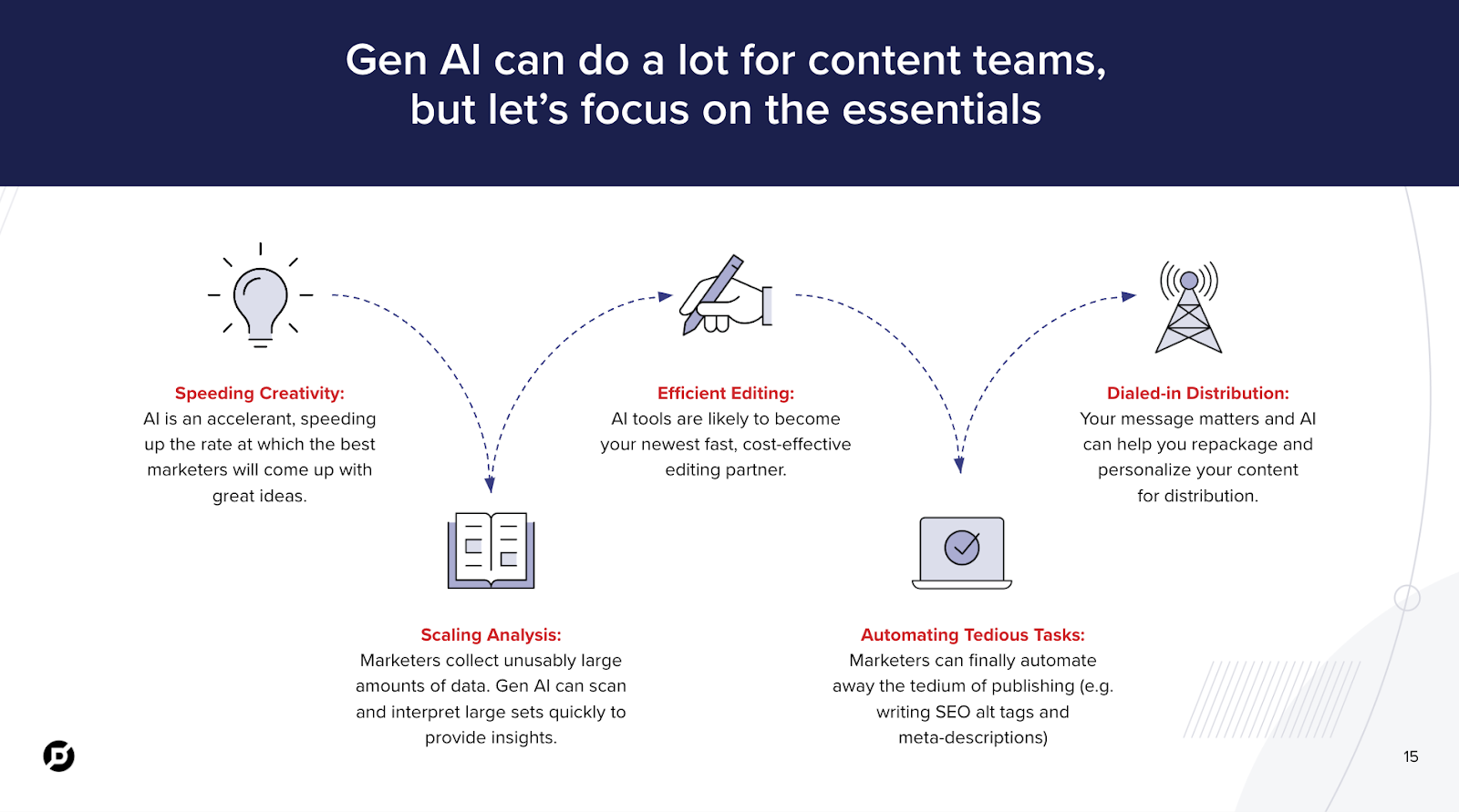

So, where does that leave content teams looking to leverage AI safely and effectively? As far as our team sees it, gen AI can support marketers in five clear ways:

- Speeding creativity
- Scaling analysis
- Efficient editing
- Automating tedious tasks
- Dialed-in distribution

My advice is to adopt an experimental approach, much like lab scientists. Keep an open mind and be willing to adjust your perspectives based on evidence.

Remember: Gen AI is only as good as the human behind the prompt.

What’s on the Horizon

As AI content tools continue to evolve, stricter copyright laws are expected to be implemented to address potential infringements. The global landscape of AI regulation remains disparate, exemplified by instances like Italy’s temporary ban on ChatGPT and the EU’s recent AI Act (the first of its kind), underscoring the necessity for standardized guidelines, governance, and legal protection.

Concerns about data validity, privacy, and other ethical issues are big themes this year.

Moreover, as companies increasingly grapple with ethical considerations in AI, there will be a heightened focus on issues such as copyright, privacy, and bias — whether through safeguarding data from public GPTs or developing proprietary solutions.

In the realm of content marketing, AI tools are set to play a pivotal role in content creation, optimization, and distribution, promising enhanced speed and efficacy. However, with AI potentially generating more low-quality content, trust in information sources is expected to diminish, highlighting the importance of relying on credible sources in an AI-driven world.

Not the Last Word

So, what’s the takeaway from all of this? Despite AI’s potential, it falls short when it comes to human insight and creativity. While AI can identify patterns, human skills elevate the work to excellence. Using AI with pragmatic intentionality is key. Understanding that we are still very much a ‘work in progress’ will allow us to ease off the gas and approach technology exploration without losing our minds.
